AI-100 Exam

Exam Code: AI-100

Exam Name: Designing and Implementing an Azure AI Solution

Certification Provider: Microsoft

NEW QUESTION 1

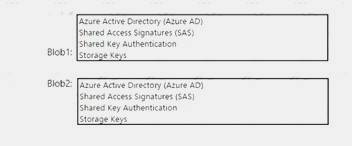

You plan to deploy an application that will perform image recognition. The application will store image data in two Azure Blob storage stores named Blob1 and Blob2. You need to recommend a security solution that meets the following requirements:

• Access to Blob1 must be controlled by using a role.

• Access to Blob2 must be time-limited and constrained to specific operations.

What should you recommend using to control access to each blob store? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

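Explanation:

Blob1: Azure Active Directory (Azure AD) role-based access control (RBAC). Azure AD authorization lets you assign roles that grant access to blob data to users, groups, and applications.

Blob2: A shared access signature (SAS). A SAS grants delegated access to a resource for a limited time and only for the permissions (for example, read-only) that you specify when you create it.

References: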

https://docs.microsoft.com/en-us/azure/storage/common/storage-auth
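A shared access signature works because it is a signed token: the storage service recomputes an HMAC-SHA256 signature over the token's fields with the account key and rejects the request if the signature does not match, the token has expired, or the operation is not among the granted permissions. The Python sketch below illustrates that idea under stated assumptions: the key, the field names, and the newline-joined string-to-sign are hypothetical simplifications, not Azure's actual SAS format (real tokens carry query parameters such as `sp`, `se`, and `sig`, and the Azure Storage SDKs generate them for you).

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

# Hypothetical key for illustration only; never hard-code a real account key.
DEMO_KEY = base64.b64encode(b"demo-key-not-a-real-secret").decode()
TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def _sign(permissions: str, expiry: str, resource: str, key_b64: str) -> str:
    # Simplified string-to-sign (NOT Azure's exact layout): permissions, expiry, resource.
    string_to_sign = "\n".join([permissions, expiry, resource]).encode("utf-8")
    digest = hmac.new(base64.b64decode(key_b64), string_to_sign, hashlib.sha256).digest()
    return base64.b64encode(digest).decode()


def issue_token(permissions: str, lifetime: timedelta, resource: str,
                key_b64: str, now: datetime) -> dict:
    """Issue a time-limited token that grants only the listed operations (e.g. "r" = read)."""
    expiry = (now + lifetime).strftime(TIME_FORMAT)
    return {
        "permissions": permissions,
        "expiry": expiry,
        "resource": resource,
        "signature": _sign(permissions, expiry, resource, key_b64),
    }


def is_allowed(token: dict, operation: str, resource: str,
               key_b64: str, now: datetime) -> bool:
    """Server-side check: valid signature, matching resource, not expired, permitted operation."""
    expected = _sign(token["permissions"], token["expiry"], token["resource"], key_b64)
    if not hmac.compare_digest(expected, token["signature"]):
        return False  # token fields were altered after signing
    if token["resource"] != resource:
        return False  # token was issued for a different blob
    expiry = datetime.strptime(token["expiry"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    if now >= expiry:
        return False  # time limit reached
    return operation in token["permissions"]
```

A token issued with `issue_token("r", timedelta(hours=1), ...)` passes `is_allowed` for a read within the hour but fails for a write, after expiry, or if someone edits its permissions, which is the time-limited, operation-limited behavior Blob2 requires.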

NEW QUESTION 2

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have Azure IoT Edge devices that generate streaming data.

On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream.

Solution: You expose a Machine Learning model as an Azure web service.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Instead, use Azure Stream Analytics and the REST API.

Note: Available in both the cloud and Azure IoT Edge, Azure Stream Analytics offers built-in machine learning-based anomaly detection capabilities that can be used to monitor the two most commonly occurring anomalies: temporary and persistent.

Stream Analytics supports user-defined functions, via REST API, that call out to Azure Machine Learning endpoints.

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-machine-learning-anomaly-detection
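Stream Analytics exposes these capabilities as the built-in `AnomalyDetection_SpikeAndDip` (temporary anomalies) and `AnomalyDetection_ChangePoint` (persistent anomalies) functions. To show the idea behind temporary-anomaly detection, here is a deliberately simple Python stand-in that uses a rolling z-score; it is not the algorithm Stream Analytics uses, and the window size, threshold, and output field names are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(readings, window=10, threshold=3.0):
    """Flag readings that lie more than `threshold` standard deviations from the
    mean of the previous `window` readings (a temporary spike or dip)."""
    history = deque(maxlen=window)
    flags = []
    for value in readings:
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            flags.append(sigma > 0 and abs(value - mu) > threshold * sigma)
        else:
            flags.append(False)  # not enough history yet
        history.append(value)
    return flags


def annotate(readings, **kwargs):
    """Attach anomaly information to each reading before it is forwarded upstream.
    The field names are hypothetical."""
    return [{"value": v, "isAnomaly": flag}
            for v, flag in zip(readings, detect_spikes(readings, **kwargs))]
```

A rolling window keeps memory constant per stream, which matters on an edge device; detecting persistent shifts (change points) would need a different test than this one.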

NEW QUESTION 3

You deploy an Azure bot.

You need to collect Key Performance Indicator (KPI) data from the bot. The type of data includes:

• The number of users interacting with the bot

• The number of messages interacting with the bot

• The number of messages on different channels received by the bot

• The number of users and messages continuously interacting with the bot

What should you configure?

- A. Bot analytics

- B. Azure Monitor

- C. Azure Analysis Services

- D. Azure Application Insights

Answer: A

Explanation:

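Bot analytics is an extension of Application Insights that provides conversation-level reporting on user, message, and channel data for a bot.

References:

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-manage-analytics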

NEW QUESTION 4

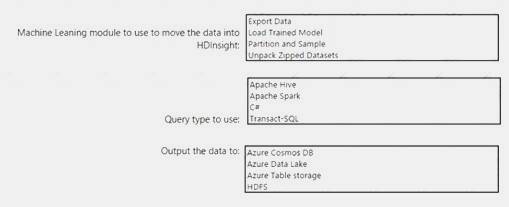

You are designing a solution that will ingest data from an Azure IoT Edge device, preprocess the data in Azure Machine Learning, and then move the data to Azure HDInsight for further processing.

What should you include in the solution? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Export Data

Use the Export data to Hive option in the Export Data module in Azure Machine Learning Studio. This option is useful when you are working with very large datasets and want to save your machine learning experiment data to a Hadoop cluster or HDInsight distributed storage.

Box 2: Apache Hive

Apache Hive is a data warehouse system for Apache Hadoop. Hive enables data summarization, querying, and analysis of data. Hive queries are written in HiveQL, which is a query language similar to SQL.

Box 3: Azure Data Lake

Default storage for the HDFS file system of HDInsight clusters can be associated with either an Azure Storage account or Azure Data Lake Storage.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/export-to-hive-query

https://docs.microsoft.com/en-us/azure/hdinsight/hadoop/hdinsight-use-hive

NEW QUESTION 5

You deploy an infrastructure for a big data workload.

You need to run Azure HDInsight and Microsoft Machine Learning Server. You plan to set the RevoScaleR compute contexts to run rx function calls in parallel.

What are three compute contexts that you can use for Machine Learning Server? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. SQL

- B. Spark

- C. local parallel

- D. HBase

- E. local sequential

Answer: ABC

Explanation:

Remote computing is available for specific data sources on selected platforms. The compute contexts that match the correct options are:

RxInSqlServer, sqlserver: Remote compute context. The target server is a single database node (SQL Server 2016 R Services or SQL Server 2017 Machine Learning Services). Computation is parallel, but not distributed.

RxSpark, spark: Remote compute context. The target is a Spark cluster on Hadoop.

RxLocalParallel, localpar: This compute context is often used to enable controlled, distributed computations that rely on instructions you provide rather than on a built-in scheduler on Hadoop. You can use it for manual distributed computing.

References:

https://docs.microsoft.com/en-us/machine-learning-server/r/concept-what-is-compute-context
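In R, you switch contexts with `rxSetComputeContext()` (for example, passing `RxLocalSeq()` or `RxLocalParallel()`), and the same rx function calls then run in the new context. As a rough Python analogy only (this is not RevoScaleR), the sketch below runs identical per-chunk analysis code either sequentially or in parallel and combines the partial results. The function and context names are hypothetical, and threads are used for portability, so CPU-bound work would need processes to gain real parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def summarize_chunk(chunk):
    """Stand-in for an rx analysis function applied to one chunk of data."""
    return sum(chunk), len(chunk)


def mean_in_context(chunks, context="local_sequential", workers=4):
    """Run the same analysis code; only the (hypothetical) compute context changes."""
    if context == "local_sequential":
        partials = [summarize_chunk(c) for c in chunks]
    elif context == "local_parallel":
        with ThreadPoolExecutor(max_workers=workers) as pool:
            partials = list(pool.map(summarize_chunk, chunks))
    else:
        raise ValueError(f"unsupported compute context: {context}")
    # Combine the per-chunk partial results into the final statistic.
    total = sum(s for s, _ in partials)
    count = sum(n for _, n in partials)
    return total / count
```

The point of the analogy is that the analysis code does not change between contexts; only where and how the chunks are processed does.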

NEW QUESTION 6

You plan to deploy Azure IoT Edge devices that will each store more than 10,000 images locally and classify the images by using a Custom Vision Service classifier. Each image is approximately 5 MB.

You need to ensure that the images persist on the devices for 14 days.

What should you use?

- A. the device cache

- B. Azure Blob storage on the IoT Edge devices

- C. Azure Stream Analytics on the IoT Edge devices

- D. Microsoft SQL Server on the loT Edge devices

Answer: B

Explanation:

References:

https://docs.microsoft.com/en-us/azure/iot-edge/how-to-store-data-blob
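The Blob Storage on IoT Edge module's auto-delete settings are configured through its module twin desired properties, with retention expressed in minutes. The sketch below works through the arithmetic for this scenario in Python: 14 days is 14 × 24 × 60 = 20,160 minutes, and 10,000 images at about 5 MB each need roughly 50,000 MB (about 50 GB) of local storage per device. The property names follow the `deviceAutoDeleteProperties` section of the module's documentation; treat the exact payload as an assumption to verify against the current docs.

```python
import json

MINUTES_PER_DAY = 24 * 60


def retention_minutes(days: int) -> int:
    """Convert a retention period in days to minutes."""
    return days * MINUTES_PER_DAY


def storage_needed_mb(image_count: int, image_size_mb: float) -> float:
    """Approximate local storage needed for the retained images."""
    return image_count * image_size_mb


# Desired-properties fragment for the blob module (assumed shape; verify before use).
desired_properties = {
    "deviceAutoDeleteProperties": {
        "deleteOn": True,
        "deleteAfterMinutes": retention_minutes(14),
    }
}

print(json.dumps(desired_properties, indent=2))
```

Because the scenario says "more than 10,000 images", the storage figure is a lower bound; size the device's disk with headroom.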

NEW QUESTION 7

You have several AI applications that use an Azure Kubernetes Service (AKS) cluster. The cluster supports a maximum of 32 nodes.

You discover that occasionally and unpredictably, the application requires more than 32 nodes. You need to recommend a solution to handle the unpredictable application load.

Which scaling method should you recommend?

- A. horizontal pod autoscaler

- B. cluster autoscaler

- C. manual scaling

- D. Azure Container Instances

Answer: B

Explanation:

To keep up with application demands in Azure Kubernetes Service (AKS), you may need to adjust the number of nodes that run your workloads. The cluster autoscaler component can watch for pods in your cluster that can't be scheduled because of resource constraints. When issues are detected, the number of nodes is increased to meet the application demand. Nodes are also regularly checked for a lack of running pods, with the number of nodes then decreased as needed. This ability to automatically scale up or down the number of nodes in your AKS cluster lets you run an efficient, cost-effective cluster.

References:

https://docs.microsoft.com/en-us/azure/aks/cluster-autoscaler
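The scale-up and scale-down behavior described above can be sketched in Python. This is a toy model with hypothetical parameters, not the Kubernetes cluster autoscaler's algorithm; it does reflect that the autoscaler works within the minimum and maximum node counts you configure (in AKS, the `--min-count` and `--max-count` values supplied when you enable it with `az aks update --enable-cluster-autoscaler`).

```python
import math


def desired_node_count(current_nodes, pending_pods, pods_per_node,
                       nodes_with_pods, min_nodes, max_nodes):
    """Toy scale-up/scale-down decision, bounded by the configured min and max.

    Simplified illustration only; it assumes a fixed pods_per_node capacity.
    """
    if pending_pods > 0:
        # Scale up: add enough nodes to fit the pods that could not be scheduled.
        target = current_nodes + math.ceil(pending_pods / pods_per_node)
    else:
        # Scale down: drop nodes that are not running any pods.
        target = nodes_with_pods
    return max(min_nodes, min(max_nodes, target))
```

For example, 25 unschedulable pods on a 10-node cluster with room for 10 pods per node yields 13 nodes, while the same burst on a 30-node cluster is capped at a maximum of 32.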

NEW QUESTION 8

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment.

You need to monitor the compute performance of the models.

Solution: You write a custom scoring script.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

You need to enable model data collection.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-enable-data-collection

NEW QUESTION 9

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are deploying an Azure Machine Learning model to an Azure Kubernetes Service (AKS) container. You need to monitor the accuracy of each run of the model.

Solution: You modify the scoring file.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

NEW QUESTION 10

Your company has a data team of Transact-SQL experts.

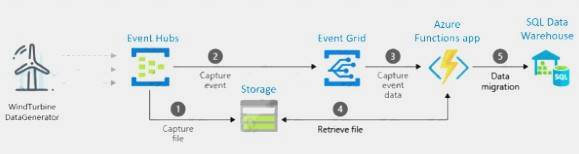

You plan to ingest data from multiple sources into Azure Event Hubs.

You need to recommend which technology the data team should use to move and query data from Event Hubs to Azure Storage. The solution must leverage the data team’s existing skills.

What is the best recommendation to achieve the goal? More than one answer choice may achieve the goal.

- A. Azure Notification Hubs

- B. Azure Event Grid

- C. Apache Kafka streams

- D. Azure Stream Analytics

Answer: B

Explanation:

Event Hubs Capture is the easiest way to automatically deliver streamed data in Event Hubs to an Azure Blob storage or Azure Data Lake store. You can subsequently process and deliver the data to any other storage destinations of your choice, such as SQL Data Warehouse or Cosmos DB.

You can capture data from your event hub into a SQL data warehouse by using an Azure function triggered by Event Grid.

Example:

First, you create an event hub with the Capture feature enabled and set an Azure blob storage as the destination. Data generated by WindTurbineGenerator is streamed into the event hub and is automatically captured into Azure Storage as Avro files.

Next, you create an Azure Event Grid subscription with the Event Hubs namespace as its source and the Azure Function endpoint as its destination.

Whenever a new Avro file is delivered to the Azure Storage blob by the Event Hubs Capture feature, Event Grid notifies the Azure Function with the blob URI. The Function then migrates data from the blob to a SQL data warehouse.

References:

https://docs.microsoft.com/en-us/azure/event-hubs/store-captured-data-data-warehouse
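In this flow, the Function's first job is to pull the blob URI out of the Event Grid notification. A minimal Python sketch, assuming the Event Grid event schema in which Event Hubs Capture publishes `Microsoft.EventHub.CaptureFileCreated` events whose `data.fileUrl` field holds the Avro blob URI; the sample payload and storage account name are hypothetical, and the step that loads rows into SQL Data Warehouse is left out.

```python
import json

CAPTURE_EVENT_TYPE = "Microsoft.EventHub.CaptureFileCreated"


def capture_file_urls(payload: str):
    """Return the blob URLs of newly captured Avro files in an Event Grid delivery.

    Event Grid delivers a JSON array of events; only capture events are kept.
    """
    events = json.loads(payload)
    return [
        event["data"]["fileUrl"]
        for event in events
        if event.get("eventType") == CAPTURE_EVENT_TYPE
    ]


# Hypothetical sample delivery: one capture event and one unrelated event.
sample = json.dumps([
    {"eventType": CAPTURE_EVENT_TYPE,
     "data": {"fileUrl": "https://example.blob.core.windows.net/capture/0/file.avro",
              "fileType": "AzureBlockBlob"}},
    {"eventType": "Microsoft.Storage.BlobCreated",
     "data": {"url": "https://example.blob.core.windows.net/other/file.txt"}},
])
```

Filtering on the event type keeps the Function safe if the subscription is later widened to other event sources.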

NEW QUESTION 11

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have Azure IoT Edge devices that generate streaming data.

On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream.

Solution: You deploy Azure Functions as an IoT Edge module.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Instead, use Azure Stream Analytics and the REST API.

Note: Available in both the cloud and Azure IoT Edge, Azure Stream Analytics offers built-in machine learning-based anomaly detection capabilities that can be used to monitor the two most commonly occurring anomalies: temporary and persistent.

Stream Analytics supports user-defined functions, via REST API, that call out to Azure Machine Learning endpoints.

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-machine-learning-anomaly-detection

NEW QUESTION 12

Your company has factories in 10 countries. Each factory contains several thousand IoT devices. The devices present status and trending data on a dashboard.

You need to ingest the data from the IoT devices into a data warehouse.

Which two Microsoft Azure technologies should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Azure Stream Analytics

- B. Azure Data Factory

- C. an Azure HDInsight cluster

- D. Azure Batch

- E. Azure Data Lake

Answer: CE

Explanation:

Azure Data Lake Store (ADLS) serves as the hyper-scale storage layer, and HDInsight serves as the Hadoop-based compute engine. Together, they can be used to prepare large amounts of data for insertion into a data warehouse.

References:

https://www.blue-granite.com/blog/azure-data-lake-analytics-holds-a-unique-spot-in-the-modern-dataarchitectur

NEW QUESTION 13

You plan to deploy an AI solution that tracks the behavior of 10 custom mobile apps. Each mobile app has several thousand users. You need to recommend a solution for real-time data ingestion for the data originating from the mobile app users.

Which Microsoft Azure service should you include in the recommendation?

- A. Azure Event Hubs

- B. Azure Service Bus queues

- C. Azure Service Bus topics and subscriptions

- D. Apache Storm on Azure HDInsight

Answer: A

Explanation:

References:

https://docs.microsoft.com/en-in/azure/event-hubs/event-hubs-about

NEW QUESTION 14

Which RBAC role should you assign to the KeyManagers group?

- A. Cognitive Services Contributor

- B. Security Manager

- C. Cognitive Services User

- D. Security Administrator

Answer: A

Explanation:

References:

https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles

NEW QUESTION 15

You need to configure versioning and logging for Azure Machine Learning models.

Which Machine Learning service application should you use?

- A. models

- B. activities

- C. experiments

- D. pipelines

- E. deployments

Answer: E

Explanation:

References:

https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-enable-logging#logging-for-deployed-

NEW QUESTION 16

You plan to deploy two AI applications named AI1 and AI2. The data for the applications will be stored in a relational database.

You need to ensure that the users of AI1 and AI2 can see only data in each user’s respective geographic region. The solution must be enforced at the database level by using row-level security.

Which database solution should you use to store the application data?

- A. Microsoft SQL Server on a Microsoft Azure virtual machine

- B. Microsoft Azure Database for MySQL

- C. Microsoft Azure Data Lake Store

- D. Microsoft Azure Cosmos DB

Answer: A

Explanation:

Row-level security is supported by SQL Server, Azure SQL Database, and Azure SQL Data Warehouse.

References:

https://docs.microsoft.com/en-us/sql/relational-databases/security/row-level-security?view=sql-server-2021
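In SQL Server, row-level security is built from a filter predicate, written as an inline table-valued function, that a security policy (`CREATE SECURITY POLICY ... ADD FILTER PREDICATE`) attaches to a table. The Python sketch below only models that behavior; the table, the user-to-region mapping, and the function names are hypothetical, and a real deployment would express the predicate in T-SQL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SalesRow:
    row_id: int
    region: str


# Hypothetical mapping from database user to region.
USER_REGIONS = {"alice": "EU", "bob": "US"}


def region_filter_predicate(row: SalesRow, user: str) -> bool:
    """Analogue of an RLS filter predicate: is this row visible to `user`?"""
    return USER_REGIONS.get(user) == row.region


def select_all(table, user):
    # The database applies the predicate on every read, so AI1 and AI2
    # cannot bypass it by omitting a WHERE clause.
    return [row for row in table if region_filter_predicate(row, user)]
```

Unknown users see no rows at all, which is the safe default for a filter predicate.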

NEW QUESTION 17

You plan to implement a new data warehouse for a planned AI solution. You have the following information regarding the data warehouse:

• The data files will be available in one week.

• Most queries that will be executed against the data warehouse will be ad-hoc queries.

• The schemas of data files that will be loaded to the data warehouse will change often.

• One month after the planned implementation, the data warehouse will contain 15 TB of data.

You need to recommend a database solution to support the planned implementation.

What two solutions should you include in the recommendation? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

- A. Apache Hadoop

- B. Apache Spark

- C. a Microsoft Azure SQL database

- D. an Azure virtual machine that runs Microsoft SQL Server

Answer: AB

NEW QUESTION 18

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an application that uses an Azure Kubernetes Service (AKS) cluster. You are troubleshooting a node issue.

You need to connect to an AKS node by using SSH.

Solution: You change the permissions of the AKS resource group, and then you create an SSH connection.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Instead, add an SSH key to the node, and then create an SSH connection.

References:

https://docs.microsoft.com/en-us/azure/aks/ssh

NEW QUESTION 19

You are designing a solution that will use the Azure Content Moderator service to moderate user-generated content.

You need to moderate custom predefined content without repeatedly scanning the collected content.

Which API should you use?

- A. Term List API

- B. Text Moderation API

- C. Image Moderation API

- D. Workflow API

Answer: A

Explanation:

The default global list of terms in Azure Content Moderator is sufficient for most content moderation needs. However, you might need to screen for terms that are specific to your organization. For example, you might want to tag competitor names for further review.

Use the List Management API to create custom lists of terms to use with the Text Moderation API. The Text - Screen operation scans your text for profanity, and also compares text against custom and shared blacklists.
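Conceptually, a custom term list is built once and then matched against each new piece of text, so it can be reused without rebuilding it for every scan. The following Python sketch is a local analogue of that screening step, not the Content Moderator API; the function names and sample terms are hypothetical.

```python
import re


def build_term_list(terms):
    """Compile a custom term list once so each piece of content is screened in one pass."""
    if not terms:
        raise ValueError("term list is empty")
    # Longest terms first so multi-word terms win over their prefixes.
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def screen(text, term_pattern):
    """Return every term-list match found in `text`, in order of appearance."""
    return [m.group(0) for m in term_pattern.finditer(text)]
```

Compiling the list into a single case-insensitive pattern mirrors the design goal of the Term List API: the expensive setup happens once, and each screening call is a single pass over the text.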

NEW QUESTION 20

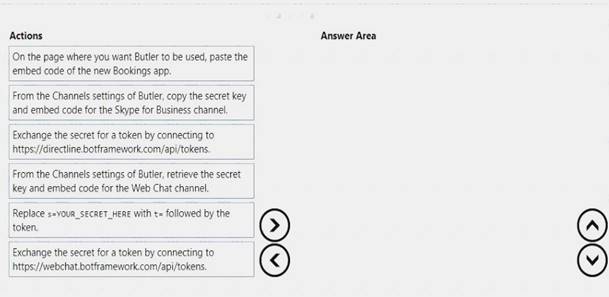

You need to integrate the new Bookings app and the Butler chatbot.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

References:

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-channel-connect-webchat?view=azure-bot-servic

NEW QUESTION 21

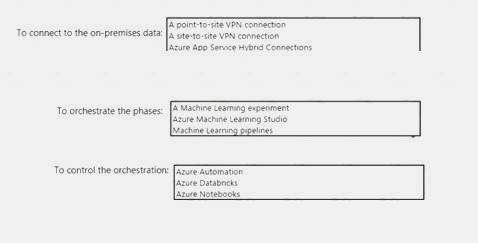

You are designing an Azure infrastructure to support an Azure Machine Learning solution that will have multiple phases. The solution must meet the following requirements:

• Securely query an on-premises database once a week to update product lists.

• Access the data without using a gateway.

• Orchestrate the separate phases.

What should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure App Service Hybrid Connections

With Hybrid Connections, Azure websites and mobile services can access on-premises resources as if they were located on the same private network. Application admins thus have the flexibility to lift-and-shift most front-end tiers to Azure with minimal configuration changes, extending their enterprise apps for hybrid scenarios.

Incorrect option: The VPN connection solutions both use gateways.

Box 2: Machine Learning pipelines

Running machine learning algorithms typically involves a sequence of tasks, including pre-processing, feature extraction, model fitting, and validation stages. For example, classifying text documents might involve text segmentation and cleaning, extracting features, and training a classification model with cross-validation. Although there are many libraries for each stage, connecting the stages is not as easy as it may look, especially with large-scale datasets. Most ML libraries are not designed for distributed computation, or they do not provide native support for pipeline creation and tuning.

Box 3: Azure Databricks

References:

https://azure.microsoft.com/is-is/blog/hybrid-connections-preview/

https://databricks.com/glossary/what-are-ml-pipelines
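The text-classification example of an ML pipeline can be sketched as a chain of stages in Python. This is a minimal illustration of the pipeline idea with hypothetical stage functions; it stops before model fitting and cross-validation, and in practice you would use a framework such as Azure Machine Learning pipelines or scikit-learn's `Pipeline` rather than this hand-rolled helper.

```python
from collections import Counter
from typing import Callable, List


def make_pipeline(steps: List[Callable]) -> Callable:
    """Chain stages so each stage's output feeds the next stage."""
    def run(data):
        for step in steps:
            data = step(data)
        return data
    return run


def clean(docs):
    # Text cleaning: normalize case and surrounding whitespace.
    return [doc.lower().strip() for doc in docs]


def segment(docs):
    # Text segmentation: split each document into tokens.
    return [doc.split() for doc in docs]


def extract_features(token_lists):
    # Feature extraction: bag-of-words counts per document.
    return [Counter(tokens) for tokens in token_lists]


featurize = make_pipeline([clean, segment, extract_features])
```

Keeping each stage a separate function is what lets an orchestrator rerun, cache, or distribute stages independently.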

NEW QUESTION 22

Your company has 1,000 AI developers who are responsible for provisioning environments in Azure. You need to control the type, size, and location of the resources that the developers can provision. What should you use?

- A. Azure Key Vault

- B. Azure service principals

- C. Azure managed identities

- D. Azure Security Center

- E. Azure Policy

Answer: E

Explanation:

Azure Policy enforces rules on the resources that can be deployed, such as the allowed resource types, SKUs (sizes), and locations, and can deny deployments that do not comply. Service principals (option B) are identities used to delegate permissions to applications; they do not restrict the type, size, or location of the resources that developers provision.

References:

https://docs.microsoft.com/en-us/azure/governance/policy/overview
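Azure Policy expresses controls on type, size, and location declaratively; for example, the built-in "Allowed locations", "Allowed resource types", and "Allowed virtual machine size SKUs" definitions deny deployments outside the lists you assign. The Python sketch below only models how such rules are evaluated against a deployment request; the allow-lists and function names are hypothetical, and real policies are written as JSON policy definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRequest:
    resource_type: str
    sku: str
    location: str


# Hypothetical allow-lists; in Azure Policy these would be assignment parameters.
POLICY = {
    "allowed_types": {"Microsoft.Compute/virtualMachines", "Microsoft.Storage/storageAccounts"},
    "allowed_skus": {"Standard_D2s_v3", "Standard_LRS"},
    "allowed_locations": {"westeurope", "northeurope"},
}


def evaluate(request: ResourceRequest, policy=POLICY):
    """Mimic a 'deny' effect: reject the request if any rule is violated."""
    violations = []
    if request.resource_type not in policy["allowed_types"]:
        violations.append("type")
    if request.sku not in policy["allowed_skus"]:
        violations.append("size")
    if request.location not in policy["allowed_locations"]:
        violations.append("location")
    return ("deny", violations) if violations else ("allow", [])
```

Because the rules are evaluated centrally at deployment time, they apply to all 1,000 developers without changing anyone's permissions.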
